Scientists trick lidar units into failing to see pedestrians

By onAnnouncements | Technology

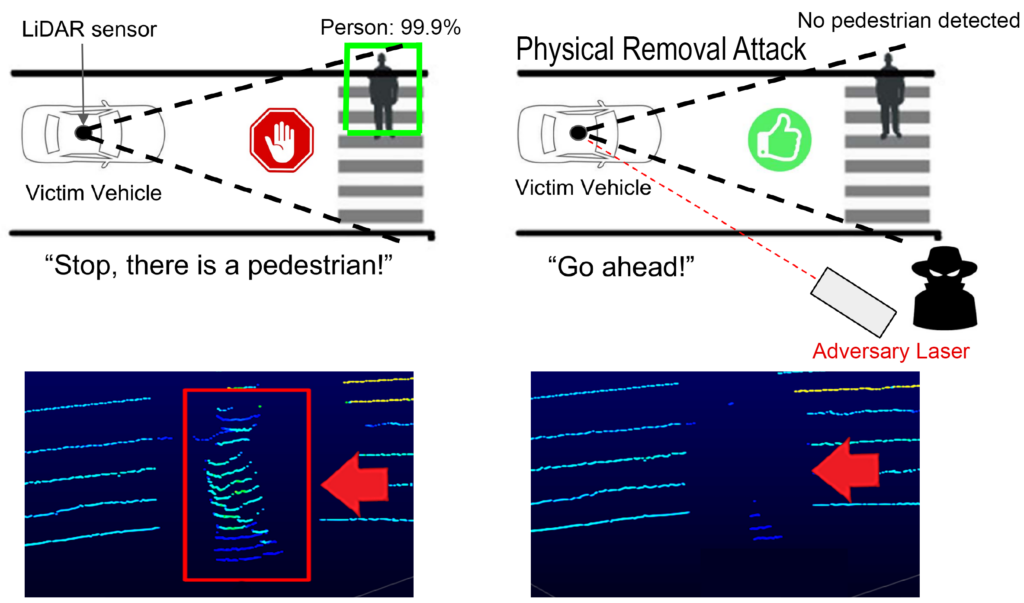

A team of scientists has published a paper demonstrating how it was able to trick lidar-based object detection systems into failing to see pedestrians and other obstacles.

The use of such spoofing techniques “causes autonomous vehicles to fail to identify and locate obstacles and, consequently, induces AVs to make dangerous automatic driving decisions,” the paper says.

The paper, titled “You Can’t See Me: Physical Removal Attacks on LiDAR-based Autonomous Vehicles Driving Frameworks,” was written by a team from the University of Florida, the University of Michigan, and the University of Electro-Communications in Japan. It is to be presented at the 2023 USENIX Security Symposium, scheduled for Aug. 9–11 in Anaheim, California.

In their moving-vehicle scenarios, the scientists used “laser-based spoofing techniques” to selectively remove the lidar “point cloud data” of genuine obstacles. They report a 92.7% success rate at removing 90% of the points in a target obstacle’s point cloud.
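The following minimal Python sketch shows one way a success rate like this could be tallied. It assumes a trial counts as a success when at least 90% of the target's points disappear. The function names and trial counts are invented for illustration and are not the paper's data or evaluation code.

```python
# Hypothetical scoring of a removal attack: a trial "succeeds" when at least
# `threshold` of the target obstacle's points are missing under attack.

def removed_fraction(points_before: int, points_after: int) -> float:
    """Fraction of the target obstacle's points missing after the attack."""
    if points_before <= 0:
        raise ValueError("target must have at least one point before the attack")
    return (points_before - points_after) / points_before


def success_rate(trials: list[tuple[int, int]], threshold: float = 0.9) -> float:
    """Share of trials whose removed fraction meets the threshold."""
    hits = sum(1 for before, after in trials
               if removed_fraction(before, after) >= threshold)
    return hits / len(trials)


# Made-up trials: (points on target without attack, points remaining under attack)
trials = [(200, 10), (180, 30), (220, 0), (150, 12)]
print(success_rate(trials))  # 0.75
```

Three of the four made-up trials lose at least 90% of their points (95%, 100% and 92%). The second trial loses only about 83%, so it counts as a failure.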

Repairer Driven News reached out to a number of lidar manufacturers for comment, and had received no responses as of deadline.

Lidar, which stands for “light detection and ranging,” works by firing pulses of laser light at objects and timing how long the reflections take to return, which gives the distance to each surface the light hits. The paper’s authors note that perception systems used in AVs, such as lidars, cameras, and radars, are “fundamental elements of autonomy and the foundation of reliable automated decisions for driver safety.”
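The beam travels out to the obstacle and back, so the measured delay covers twice the distance. Here is a short Python sketch of that time-of-flight arithmetic. The 20-meter pedestrian is just an example.

```python
# Time-of-flight ranging: the pulse travels out and back, so the one-way
# distance is half of (speed of light x round-trip time).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance in meters to the surface that reflected the pulse."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Round-trip time in seconds for a reflector at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A pedestrian about 20 m ahead returns the pulse after roughly 133 nanoseconds.
t = round_trip_for_range(20.0)
print(f"{t * 1e9:.1f} ns")                  # 133.4 ns
print(f"{range_from_round_trip(t):.2f} m")  # 20.00 m
```

The nanosecond scale of these delays helps explain why the researchers stress that a spoofing laser must be precisely timed.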

While previous research has shown that lidar can be fooled into detecting objects that do not exist or into failing to recognize real ones, the team said it had discovered something new: how to trick lidar into deleting data about real obstacles.

“We mimic the lidar reflections with our laser to make the sensor discount other reflections that are coming in from genuine obstacles,” Sara Rampazzi, a UF professor of computer and information science and engineering who led the study, said in a statement. “The lidar is still receiving genuine data from the obstacle, but the data are automatically discarded because our fake reflections are the only one perceived by the sensor.”
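Imagine a sensor that keeps a single echo per laser firing. The Python sketch below illustrates the effect Rampazzi describes under that assumption. It uses a "strongest return" rule, which is only one of several modes real lidars offer, along with made-up ranges and intensities. It is not the attack model from the paper. When a brighter fake echo arrives alongside each genuine one, the genuine point never reaches the point cloud.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Echo:
    """One reflection received during a single laser firing (illustrative model)."""
    range_m: float
    intensity: float
    spoofed: bool = False  # ground-truth label, known only in this simulation


def strongest_return(echoes: list[Echo]) -> Echo | None:
    """Assumed single-return mode: keep the most intense echo, discard the rest."""
    return max(echoes, key=lambda e: e.intensity, default=None)


def genuine_points(firings: list[list[Echo]]) -> int:
    """Count firings whose kept echo came from the real obstacle."""
    kept = [strongest_return(echoes) for echoes in firings]
    return sum(1 for echo in kept if echo is not None and not echo.spoofed)


# Five beams hit a pedestrian 20 m away, each producing a weak genuine echo.
normal = [[Echo(range_m=20.0, intensity=0.3)] for _ in range(5)]

# Under attack, each firing also receives a brighter, injected echo (values made up).
attacked = [echoes + [Echo(range_m=2.0, intensity=0.9, spoofed=True)]
            for echoes in normal]

print(genuine_points(normal))    # 5
print(genuine_points(attacked))  # 0
```

In this toy model the genuine echoes are still received, but the selection rule discards them. That matches the quote's point that the obstacle's data are thrown away rather than never arriving.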

Scientists demonstrated the attack on moving vehicles and robots with the attacker placed about 15 feet away on the side of the road. With upgraded equipment, they said, an attack could theoretically be mounted from a greater distance. They said the equipment needed is fairly basic, but that the laser must be perfectly timed to the lidar sensor and moving vehicles must be carefully tracked to keep the laser pointing in the right direction.

“It’s primarily a matter of synchronization of the laser with the lidar device. The information you need is usually publicly available from the manufacturer,” said S. Hrushikesh Bhupathiraju, a UF doctoral student in Rampazzi’s lab and one of the lead authors of the study.
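The timing problem can be illustrated with a simplified model of a mechanically spinning lidar that rotates at a constant rate. The Python sketch below uses hypothetical parameters. It computes when the sensor next faces a given direction and how briefly it dwells on a narrow slice of its field of view. Real sensors, and the paper's setup, may be more complicated. The function names are illustrative only.

```python
def facing_times(t0_s: float, azimuth0_deg: float, target_deg: float,
                 rotation_hz: float, count: int) -> list[float]:
    """Times (s) at which a lidar spinning at rotation_hz next points at
    target_deg, given that it pointed at azimuth0_deg at time t0_s.
    Assumes constant rotation with azimuth increasing over time."""
    period_s = 1.0 / rotation_hz
    # Angle still to sweep (0 to 360 degrees), converted to time.
    offset_s = ((target_deg - azimuth0_deg) % 360.0) / 360.0 * period_s
    return [t0_s + offset_s + k * period_s for k in range(count)]


def dwell_time(width_deg: float, rotation_hz: float) -> float:
    """Seconds the beam spends sweeping across a slice width_deg wide."""
    return width_deg / 360.0 / rotation_hz


# Hypothetical: a 10 Hz lidar that faced 0 degrees at t = 0; target at 90 degrees.
times = facing_times(0.0, 0.0, 90.0, rotation_hz=10.0, count=3)
print([round(t * 1000, 3) for t in times])                 # [25.0, 125.0, 225.0] (ms)
print(f"{dwell_time(1.0, rotation_hz=10.0) * 1e6:.1f} us")  # 277.8 us
```

Under these assumptions, the attacker's laser gets a window of a few hundred microseconds per rotation for a one-degree slice. As the target moves, the target azimuth shifts, which is why the vehicle must be tracked continuously.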

Tests showed the attacks were effective against three state-of-the-art camera-lidar fusion models, in which inputs from the different sensors are combined. Scientists were able to delete data for both static obstacles and moving pedestrians, and to follow a slow-moving vehicle using basic camera tracking equipment.

In simulations of autonomous vehicle decision making, the deletion of data caused a vehicle to continue accelerating toward a pedestrian, rather than stopping as it should.
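A toy Python planner shows why missing points matter. It makes two hypothetical assumptions: the detector needs at least 10 points to report an object, and the car brakes only for a detected obstacle inside its stopping distance. The thresholds and speeds are illustrative and are not taken from the paper or any production system.

```python
def stopping_distance(speed_mps: float, decel_mps2: float = 6.0,
                      reaction_s: float = 0.5) -> float:
    """Distance (m) covered during the reaction delay plus braking to a stop."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def decide(speed_mps: float, obstacle_range_m: float, obstacle_points: int,
           min_points: int = 10) -> str:
    """Brake only if the object is detected and lies within stopping distance.
    min_points is an assumed detector threshold, not a real system's value."""
    detected = obstacle_points >= min_points
    if detected and obstacle_range_m <= stopping_distance(speed_mps):
        return "brake"
    return "accelerate"


# Pedestrian 20 m ahead of a car doing 15 m/s (about 54 km/h).
print(decide(15.0, 20.0, obstacle_points=40))  # brake
# Same scene after an attack strips 90% of the pedestrian's 40 points.
print(decide(15.0, 20.0, obstacle_points=4))   # accelerate
```

At 15 m/s the assumed stopping distance is 26.25 m, so a detected pedestrian at 20 m triggers braking. With only 4 points left, the object is never reported and the planner carries on.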

The scientists say such attacks can be defended against by updating the lidar sensors or the software that interprets the raw data. For instance, they said, the software could be taught to look for the telltale signatures of the spoofed reflections added by the laser attack.

“Revealing this liability allows us to build a more reliable system,” said Yulong Cao, a Michigan doctoral student and primary author of the study. “In our paper, we demonstrate that previous defense strategies aren’t enough, and we propose modifications that should address this weakness.”

More information

“You Can’t See Me: Physical Removal Attacks on LiDAR-based Autonomous Vehicles Driving Frameworks”

Images

Featured image: With the right timing, researchers say, lasers shined at autonomous vehicles’ lidar sensors can delete data about obstacles like pedestrians. (Provided by the University of Florida)

Illustration from “You Can’t See Me: Physical Removal Attacks on LiDAR-based Autonomous Vehicles Driving Frameworks,” authors Yulong Cao, S. Hrushikesh Bhupathiraju, Pirouz Naghavi, Takeshi Sugawara, Z. Morley Mao, and Sara Rampazzi.