Research advances replacing touchscreens with gesture-based vehicle interfaces

By onTechnology

With the goal of keeping drivers’ eyes on the road, technology company Nvidia has applied artificial intelligence (AI) to develop a set of 10 reliably identifiable hand gestures that could take the place of finger jabs at a vehicle touch screen.

In a paper titled “Towards Selecting Robust Hand Gestures for Automotive Interfaces,” the authors note that driver distraction is a serious threat to automotive safety and was a factor in 10% of all police-reported crashes in 2013. One source of that distraction is the “visual-manual interface,” or touch screen, built into nearly all new vehicles.

Nvidia suggests that “automotive touchless, hand gesture-based” user interfaces (UIs) can help reduce driver distraction. The company evaluated 25 different gestures and came up with a list of 10 “robust” gestures that both vision-based algorithms and humans can reliably recognize.

As authors Shalini Gupta, Pavlo Molchanov, Xiaodong Yang, Kihwan Kim, Stephen Tyree, and Jan Kautz explain, there has been a fair amount of research on automotive gesture UIs in recent years, but most of it has focused on “algorithms for hand gesture recognition” rather than on identifying the set of gestures that would work best.

“To the best of our knowledge, ours is the first work to address the important question of selecting robust gestures for automotive UIs from a system design perspective,” they said.

A 2014 study showed that users perceived gesture UIs as more secure, less distracting, and slightly more useful than touch screens. Although users found gesture interfaces more desirable and more worth buying, they also rated them as less reliable than touch screens.

Ultraleap, a developer of hand-tracking and haptics technology, said in a 2019 study that the automotive gesture control market was forecast to be worth over $13 billion by 2024. BMW, Mercedes-Benz, Volkswagen, Ford, Hyundai, Nissan, Toyota, and Lexus are among the automakers in the process of implementing gesture control systems, the company said.

“The choice of hand gestures in automotive UIs is an important design consideration which can greatly influence their success and widespread adoption,” the Nvidia study says.

It argues that hand gestures are superior to voice-based interfaces, which can be affected by ambient sounds in vehicles and cause audio distractions of their own. Gestures that can be performed with the hands on or close to the steering wheel “can result in low physical distraction,” the study says.

A gesture-based interface can also be used for driver monitoring, which government and private safety advocates consider crucial for the safe operation of Level 2 and higher driver assistance systems.

In its testing, Nvidia found that the “shake hand,” “thumb up,” “close hand two times,” and “open hand” gestures were among the most accurately classified by both humans and vision-based algorithms. The “move hand down” and “show two fingers” gestures were among the least accurately classified.

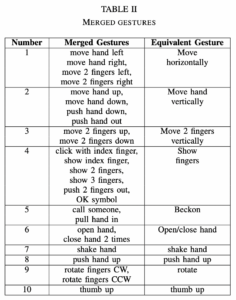

When two or more similar gestures were frequently confused with one another, the researchers simply merged them into a single gesture. For instance, “rotate fingers clockwise” and “rotate fingers counter-clockwise” were merged into a single “rotate fingers” gesture.
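The effect of merging confusable gestures on measured accuracy can be illustrated with a short Python sketch. It is not Nvidia's code: the label map `MERGE_MAP`, the helper functions, and the toy predictions below are hypothetical and invented for illustration, not data from the study. It shows how collapsing the two rotation directions into one "rotate fingers" class turns their mutual confusions into correct classifications.

```python
# Illustrative sketch only: hypothetical label map and toy data, not from the Nvidia study.
MERGE_MAP = {
    "rotate fingers clockwise": "rotate fingers",
    "rotate fingers counter-clockwise": "rotate fingers",
}


def merge_label(label: str) -> str:
    """Return the merged class name for a gesture label (unchanged if not merged)."""
    return MERGE_MAP.get(label, label)


def accuracy(true_labels, predicted_labels, merge=False):
    """Fraction of predictions matching the ground truth, optionally after merging classes."""
    pairs = list(zip(true_labels, predicted_labels))
    if merge:
        pairs = [(merge_label(t), merge_label(p)) for t, p in pairs]
    correct = sum(1 for t, p in pairs if t == p)
    return correct / len(pairs)


# Toy example: the classifier swaps the two rotation directions.
true_labels = [
    "rotate fingers clockwise",
    "rotate fingers counter-clockwise",
    "thumb up",
    "open hand",
]
predicted_labels = [
    "rotate fingers counter-clockwise",
    "rotate fingers clockwise",
    "thumb up",
    "open hand",
]

print(accuracy(true_labels, predicted_labels))              # 0.5
print(accuracy(true_labels, predicted_labels, merge=True))  # 1.0
```

Merging trades expressiveness for reliability: the interface loses the ability to distinguish rotation direction, but the remaining gesture set is easier to recognize.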

The results “indicate agreement [between humans and machines] in correctly recognizing certain types of hand gestures versus others,” the study says. “It suggests the presence of inherent differences between the various gestures which render them less or more easily recognizable.”

Images

Featured image: Haptic technology developed by Ultraleap can be used to create a programmable tactile zone floating in mid-air above the central console. (Ultraleap)

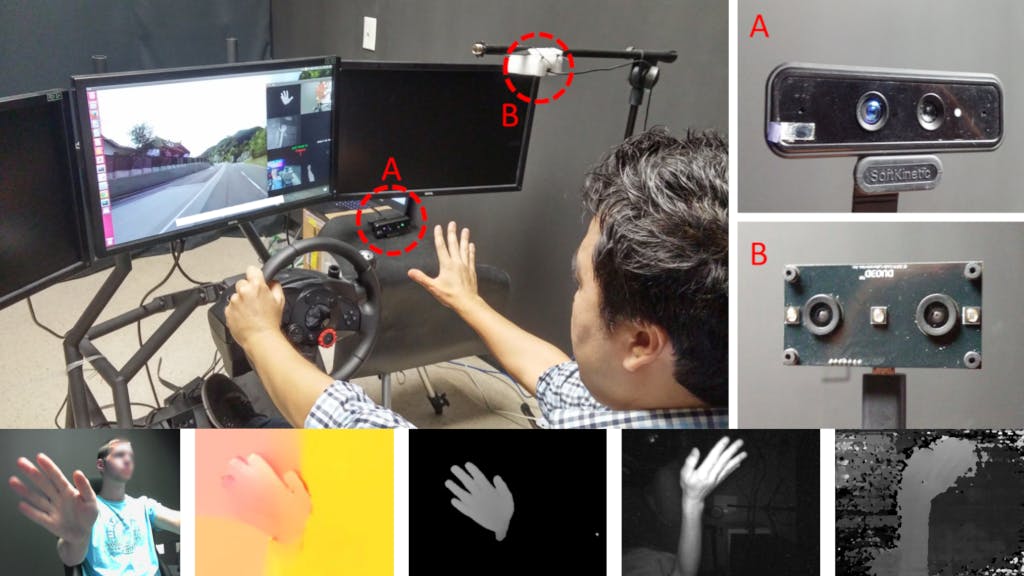

The driving simulator used for data collection, with the main monitor displaying simulated driving scenes and a user interface for prompting gestures; (A) a SoftKinetic depth camera (DS325) for recording depth and RGB data; and (B) a DUO 3D camera capturing stereo IR data. Both sensors capture 320×240 pixels at 30 frames per second. (Bottom row) Examples of each recorded modality, from left: RGB, optical flow, depth, IR-left, and IR-disparity. (Nvidia)