Innoviz claims new lidar can deliver L3 vehicle autonomy

In getting to the next level of semi-autonomous driving, the capabilities and mounting positions chosen for lidar units are going to play a critical role, the CEO of one technology company said during a recent webinar on lidar standards for Level 3 autonomy.

Omer Keilaf, CEO of Innoviz Technologies, said his company’s solid-state InnovizTwo lidar unit, unlike most now on the market, has the combination of cost, performance, reliability and size to make L3 highway driving commercially feasible.

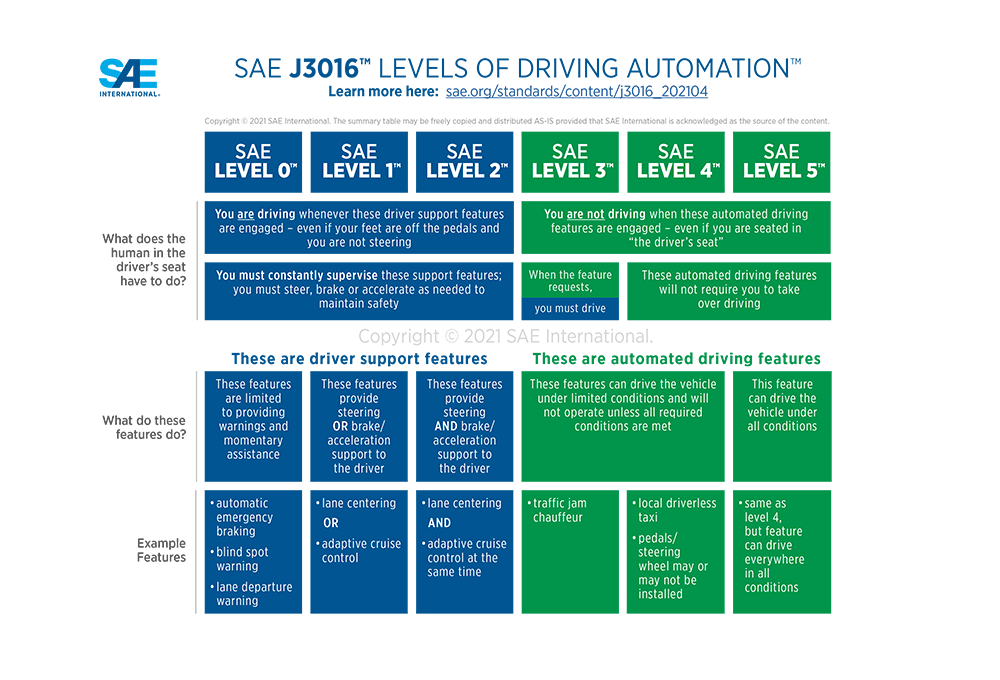

There is a “quantum leap” between the “partial driving automation” of L2 and L2+ systems and the “conditional driving automation” that ISO and SAE International classify as L3, Keilaf said.

“The main reason is because, at Level 2, the company doesn’t need to take any responsibility if something wrong happens. It’s the driver’s fault, even though the car is making driving decisions. So the driver needs to be ready to engage if something wrong happens,” Keilaf said.

At L3, the manufacturer takes responsibility. “They need more sensors, more processing power, and they need redundancy. So, when a car is driving autonomously, it cannot rely on a sensor that might degrade—it could be low light conditions, direct sunlight, or rain. And for that reason, you need to have sensors that can complement each other.”

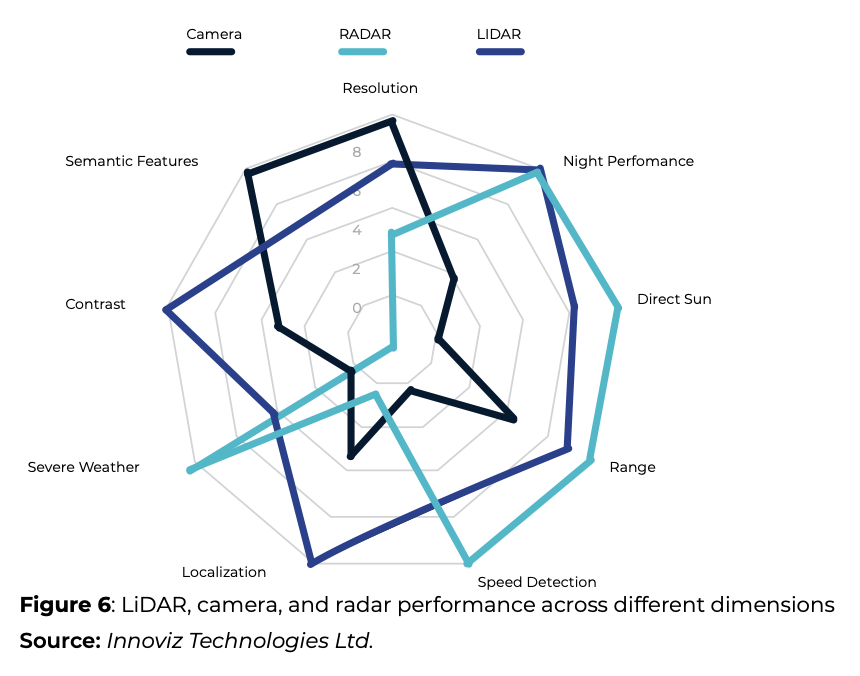

The addition of lidar to the more commonly used technologies of cameras and radar units is generally acknowledged in the industry to be crucial to self-driving capability. General Motors expects to be the first to bring lidar technology into the mainstream, with the Ultra Cruise system it plans to offer on certain models in 2023.

Lidar, which stands for “light detection and ranging,” complements cameras and radar because it functions well under conditions that challenge the other technologies. It works by firing a beam of laser light at an object, and then capturing the reflection of that beam, providing a three-dimensional picture of objects around the vehicle known as a “point cloud,” showing where the objects are headed and how fast they’re moving.
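The ranging step can be illustrated with a minimal sketch. It assumes a time-of-flight design, in which distance is derived from the round-trip time of the laser pulse; the article does not say which measurement principle any particular unit uses. The function names and the axis convention (x forward, y left, z up) are hypothetical choices for illustration, not part of any Innoviz interface.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    """Distance to the reflecting surface for a time-of-flight measurement.

    The pulse travels to the object and back, so the one-way
    distance is half the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range plus beam angles) into an (x, y, z) point.

    Assumed convention: x forward, y left, z up, angles in degrees.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


if __name__ == "__main__":
    # A reflection captured 667 nanoseconds after firing is roughly 100 m away.
    distance = tof_range_m(667e-9)
    print(f"range: {distance:.2f} m")
    print("point:", to_cartesian(distance, azimuth_deg=5.0, elevation_deg=-1.0))
```

Repeating this conversion for every beam direction in a frame yields the set of points that makes up the point cloud.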

The vehicle uses information from the various sensors in its driving decision-making, in a process defined as “sensor fusion.”

Keilaf suggested that the most economical way for automakers to get to L3 is to equip their vehicles with the technology needed for that level of operation, but allow them to operate at L2 or L2+ only, using the opportunity to crowdsource data in real time.

“The car maker can leverage [the technology investment] by collecting data, validating, and basically measuring the level of waste that exists in the system and improve it over time by doing software upgrades [over the air]. And that’s a good way to accelerate the process,” he said.

Keilaf restricted his analysis to highway driving, rather than urban scenarios, which present a greatly different set of challenges. Restricting L3 vehicles mostly to highways “would simplify overall autonomous system requirements and costs, eliminate a huge daily risk from our lives, and reduce the need for human control of a car for many hours every day,” Keilaf said in an accompanying whitepaper.

The capabilities required for highway driving are “mostly related to the speed of the car. Obviously, the faster the car is going to drive autonomously, it needs more time to react. It means that it needs to see further away, means that it needs to predict and understand the scene better in order to drive safely,” he said.

While many types of lidars have been developed, Keilaf said, most are not able to meet the requirements for highway L3 driving because of issues of cost, performance, reliability and size.

To be successful, L3 systems will have to be not only safe but also comfortable for the occupants, Keilaf said. In other words, though driving characteristics like frequent, sudden stops or directional changes might keep a vehicle from crashing, they will not be acceptable to consumers.

Keilaf suggested that there’s some misunderstanding in the industry about what capabilities are most important in a lidar unit.

“The market is very obsessed with range. You’ll see everybody’s talking about how far the lidar can see,” he said. And yet, he added, the single most important parameter is actually the sensor’s vertical resolution.

“One of the greatest challenges for an autonomous vehicle is to detect a small, extremely low reflectivity object, such as a tire, at long distance while driving at highway speeds,” according to the whitepaper. “Many trade-offs are required in order to ensure safe braking and avoid a crash.”

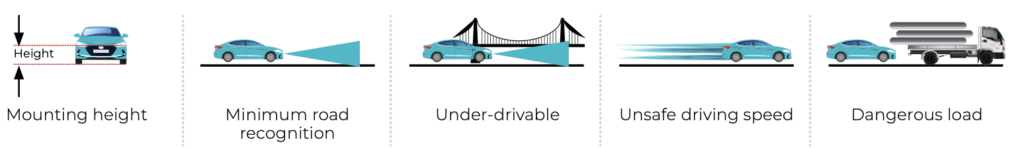

Where the lidar unit is placed on the vehicle makes a significant difference, Keilaf said. If it’s mounted in the grille, then the total vertical field of view (VFOV) needs to be 25 to 40 degrees. But if it’s mounted on the roof, a lower VFOV would be needed, because detection of objects is easier and the hood blocks the downward view.

Innoviz suggests that OEMs consider all possible mounting locations in all models in which the lidar units will be installed.

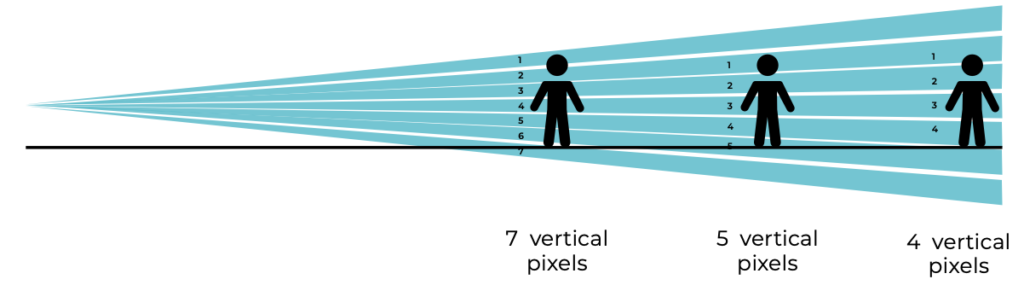

The resolution of the unit also plays a crucial role. For the vehicle’s software to detect and classify a person in its field of view, at least eight pixels—two horizontal and four vertical—are needed.
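A rough sketch of how that pixel requirement relates to distance and angular resolution follows. The two-by-four pixel threshold comes from the article; the person's dimensions and the 0.1-degree resolution are assumed values chosen only for illustration, and the function names are hypothetical.

```python
import math


def subtended_angle_deg(extent_m: float, distance_m: float) -> float:
    """Angle covered by an object of the given size, seen head-on."""
    return math.degrees(2.0 * math.atan(extent_m / (2.0 * distance_m)))


def pixels_on_target(extent_m: float, distance_m: float, resolution_deg: float) -> int:
    """Whole number of pixels that fit across the object at this distance."""
    return math.floor(subtended_angle_deg(extent_m, distance_m) / resolution_deg)


def can_classify_person(height_m, width_m, distance_m, v_res_deg, h_res_deg) -> bool:
    """Apply the article's threshold: at least 2 horizontal and 4 vertical pixels."""
    vertical = pixels_on_target(height_m, distance_m, v_res_deg)
    horizontal = pixels_on_target(width_m, distance_m, h_res_deg)
    return horizontal >= 2 and vertical >= 4


if __name__ == "__main__":
    # Assumed: a 1.7 m x 0.5 m person and 0.1 degree resolution on both axes.
    for distance in (100.0, 250.0):
        ok = can_classify_person(1.7, 0.5, distance, v_res_deg=0.1, h_res_deg=0.1)
        print(f"{distance:.0f} m: classifiable = {ok}")
```

Under these assumed numbers the person is covered by enough pixels at 100 meters but not at 250 meters, which shows why resolution and usable range cannot be considered separately.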

Whether the lidar unit detects an obstacle early enough for the car to stop at a safe distance is another consideration. The sensor needs to be able to differentiate various objects, such as pedestrians, bicycles and trucks, at that distance with an acceptable level of certainty.

To avoid “false alarms,” Keilaf said, at least two vertical pixels must be detected across multiple frames; one pixel is simply not enough. In real-world terms, this means that, in order to recognize an object at least 14 centimeters high at a safe stopping distance of 100 meters, the vehicle’s speed would be limited to 130 kph, or about 81 mph, on a dry road.
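The arithmetic behind figures like these can be sketched with a simple constant-deceleration model. The 14-centimeter object, two-pixel minimum, 100-meter distance and 130 kph speed come from the article; the 0.5-second reaction time and 8 m/s² deceleration are assumptions picked for illustration, since the article does not state the values Innoviz used.

```python
import math


def required_vertical_resolution_deg(object_height_m, distance_m, min_pixels):
    """Coarsest vertical resolution that still puts min_pixels on the object."""
    angle = math.degrees(2.0 * math.atan(object_height_m / (2.0 * distance_m)))
    return angle / min_pixels


def stopping_distance_m(speed_mps, reaction_s, decel_mps2):
    """Distance covered while reacting, plus distance covered while braking."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def max_speed_kph(available_m, reaction_s, decel_mps2):
    """Highest speed from which the car can stop within available_m.

    Solves v*t + v^2 / (2a) = d for v (positive root of the quadratic).
    """
    a, t, d = decel_mps2, reaction_s, available_m
    v = -a * t + math.sqrt((a * t) ** 2 + 2.0 * a * d)
    return v * 3.6


if __name__ == "__main__":
    res = required_vertical_resolution_deg(0.14, 100.0, min_pixels=2)
    print(f"vertical resolution needed: about {res:.3f} degrees")

    # Assumed values, not taken from the article: 0.5 s reaction, 8 m/s^2 braking.
    speed = max_speed_kph(100.0, reaction_s=0.5, decel_mps2=8.0)
    print(f"max speed for a 100 m stop: about {speed:.0f} kph")
```

With these assumed inputs the model lands close to the 130 kph figure, but a wet road (lower deceleration) or a longer reaction time would lower the limit noticeably.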

Environmental conditions like rain or snow, lighting, road conditions and blockage of the sensor can also reduce the system’s performance and increase required braking time, Keilaf said.

The lidar unit’s frame rate, the number of times per second that the scene is refreshed, is also important; the higher the frame rate, the shorter the time needed to detect movement and react.
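To put the frame rate in perspective, the sketch below estimates how far a vehicle travels while the lidar collects the frames needed to confirm a detection. The frame rates and the three-frame confirmation count are hypothetical values for illustration; the article gives no specific figures.

```python
def distance_travelled_m(speed_kph: float, frames_per_second: float, frames_needed: int) -> float:
    """Distance covered while waiting for frames_needed consecutive frames."""
    speed_mps = speed_kph / 3.6
    confirmation_time_s = frames_needed / frames_per_second
    return speed_mps * confirmation_time_s


if __name__ == "__main__":
    # Assumed: detection must persist for 3 frames before the car reacts.
    for fps in (10.0, 20.0):
        metres = distance_travelled_m(130.0, fps, frames_needed=3)
        print(f"{fps:.0f} frames/s: {metres:.1f} m travelled before confirmation")
```

Doubling the frame rate halves the distance consumed by confirmation, leaving more of the detection range available for braking.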

For lidar makers, Keilaf said, the challenge is to combine high resolution, frame rate and VFOV in a unit that is compact, efficient, rugged and affordable.

More information

Innoviz whitepaper: Designing-a-Level-3-LiDAR-for-Highway-Driving_31Jan22.pdf

Images

Featured image: the InnovizTwo lidar unit. (Provided by Innoviz)

SAE levels of driving automation. (Provided by SAE)

Lidar, camera and radar performance under different conditions. (Provided by Innoviz)

Considerations involved in urban and highway use of lidar. (Provided by Innoviz)

Vertical field of view (VFOV) considerations for lidar use. (Provided by Innoviz)

At least four vertical pixels are needed for a system to recognize a pedestrian. (Provided by Innoviz)