IIHS: Drivers don’t understand limits of Level 2 systems, particularly Autopilot


New research by the Insurance Institute for Highway Safety found some drivers misunderstand the capabilities of the more advanced ADAS systems on the market, findings with possible ramifications for collision repairers.

The systems in question are examples of SAE Level 2 autonomy, a technological level perhaps most famously represented by Tesla’s Autopilot and Cadillac’s Super Cruise. The IIHS studied drivers’ perceptions of both of these names, along with Nissan’s ProPilot Assist, BMW’s Driving Assistant Plus, and Audi’s and Acura’s Traffic Jam Assist.

SAE Level 2 refers to a system that can control the vehicle’s motion both forward and backward (accelerating and braking) and side to side (steering). Level 1 involves ADAS doing one of these things, but not both (for example, adaptive cruise control without lane-keeping or lane-centering abilities). At both levels, however, the human is still always in charge of monitoring the road.

“None of these systems reliably manage lane-keeping and speed control in all situations,” the IIHS wrote about the five system names on Thursday. “… All of them require drivers to remain attentive, and all but Super Cruise warn the driver if hands aren’t detected on the wheel. Super Cruise instead uses a camera to monitor the driver’s gaze and will issue a warning if the driver isn’t looking forward.”

According to the IIHS, no mass-market vehicle advances to SAE Level 3 autonomy, where the driver can actually quit paying attention but must be ready to be a fallback if the system encounters something it can’t handle. Human nature and reaction time might make this a dangerous proposition in some cases.

In Level 4, the human isn’t necessary; the vehicle can drive itself just fine, but only within a designated set of conditions. (For example, a well-mapped portion of a city at speeds of 20 mph or less.) At Level 5, the vehicle can go on-road anywhere an average human driver could reasonably go.
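For readers who find the levels easier to follow as a decision tree, here is a minimal Python sketch of the distinctions described above. It is only an illustration of this article's paraphrase, not the SAE's formal definitions: the class, field and function names are hypothetical, and Level 0 (no automation at all) comes from the SAE scale itself rather than from the IIHS material.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomationFeatures:
    """Hypothetical summary of what a driving system does (illustrative only)."""
    controls_speed: bool       # forward/backward control, e.g. adaptive cruise control
    controls_steering: bool    # side-to-side control, e.g. lane centering
    driver_must_monitor: bool  # human must watch the road at all times
    driver_is_fallback: bool   # human must be ready to take over if asked
    limited_conditions: bool   # only works within a designated set of conditions


def sae_level(f: AutomationFeatures) -> int:
    """Return a rough SAE level (0-5) following the article's simplified description."""
    if not (f.controls_speed or f.controls_steering):
        return 0  # no driving automation
    if not (f.controls_speed and f.controls_steering):
        return 1  # one kind of control, but not both
    if f.driver_must_monitor:
        return 2  # both kinds of control, human still monitors the road
    if f.driver_is_fallback:
        return 3  # human may stop paying attention but must be ready to take over
    return 4 if f.limited_conditions else 5


# A system that steers and manages speed while the driver must stay attentive.
level_2_example = AutomationFeatures(True, True, True, True, True)
print(sae_level(level_2_example))  # prints 2
```

Under this simplification, every system named in the IIHS survey falls into the Level 2 branch, since each one handles both steering and speed but requires the driver to remain attentive.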

The IIHS asked survey participants about the names of the various Level 2 systems without specifying which OEM was tied to the brand. (For example, “Autopilot” rather than “Tesla Autopilot.”)

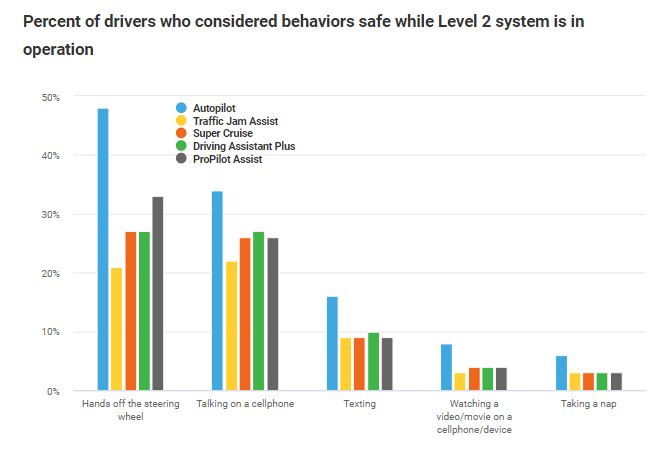

The term “Autopilot” was far more misunderstood than the other brand names, according to the IIHS.

“When asked whether it would be safe to take one’s hands off the wheel while using the technology, 48 percent of people asked about Autopilot said they thought it would be, compared with 33 percent or fewer for the other systems,” the IIHS wrote. “Autopilot also had substantially greater proportions of people who thought it would be safe to look at scenery, read a book, talk on a cellphone or text. Six percent thought it would be OK to take a nap while using Autopilot, compared with 3 percent for the other systems.”

Ironically, Super Cruise is the only one of the systems that doesn’t warn drivers whose hands are off the wheel, yet equal or greater proportions of subjects associated three of the other systems with the ability to take one’s hands off the wheel.

At least a quarter of participants also felt it was OK to talk on a cellphone with the various advanced ADAS systems in operation, though the IIHS itself admits the evidence is “mixed” that talking on a phone increases crash risk.

But texting is certainly dangerous, and 16 percent of drivers felt it’d be OK to do so with Tesla’s Autopilot. Around 10 percent felt that’d be fine under the other systems, too.

Some survey participants also felt you could take a nap or watch a movie while the various systems were operable.

“The name ‘Autopilot’ was associated with the highest likelihood that drivers believed a behavior was safe while in operation, for every behavior measured, compared with other system names,” the IIHS wrote. “Many of these differences were statistically significant.”

Contacted for comment about the results, Tesla replied in a statement:

This survey is not representative of the perceptions of Tesla owners or people who have experience using Autopilot, and it would be inaccurate to suggest as much. If IIHS is opposed to the name “Autopilot,” presumably they are equally opposed to the name “Automobile.”

Tesla provides owners with clear guidance on how to properly use Autopilot, as well as in-car instructions before they use the system and while the feature is in use. If a Tesla vehicle detects that a driver is not engaged while Autopilot is in use, the driver is prohibited from using it for that drive.

According to the IIHS, not many of the participants had ADAS experience, and even fewer had Level 2 systems like those studied.

“There was less variation observed among the other four SAE Level 2 system names when compared with each other,” the IIHS wrote. “A limited proportion of drivers had experience with advanced driver assistance systems: 9–20% of respondents reported having at least one crash avoidance technology such as forward collision warning or lane departure warning, and fewer of these reported driving a vehicle in which Level 2 systems were available. Drivers reported that they would consult a variety of sources for information on how to use a Level 2 system.”

On one hand, the study suggests driver confusion could lead to inattention, which in turn could lead to collisions. This could help keep shops stocked with business.

Prior IIHS research has found that the individual ADAS functions which make up these more automated forms of driving (lane keeping, adaptive cruise control, etc.) reduce crashes. In 2018, the IIHS also reported that Version 1 Autopilot reduced third-party physical damage claims and first-party collision claims.

So drivers thinking they can text or read a book while such systems are engaged could push these frequencies back up and soften the volume blow to shops. (Of course, the driver confusion reported Thursday might already have existed in the real-world population represented in those 2018 Autopilot results. This would mean shops couldn’t expect a frequency bump at all.)

Dashboard indicators

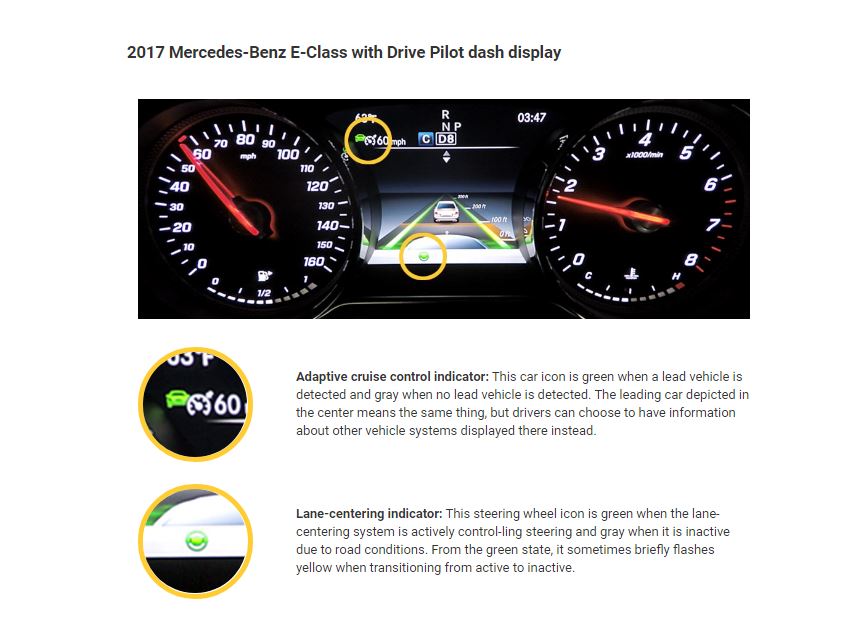

Another IIHS study announced Thursday found many drivers confused about the dash indicators related to Mercedes’ Level 2 Drive Pilot system on a 2017 E-Class.

The 80 test subjects watched videos of the dash and were asked about the adaptive cruise control and lane-centering features. Half of them received some training about the system, while the other 40 didn’t.

“In the study, certain key pieces of information eluded many of the participants,” the IIHS wrote. “While almost everyone was able to understand when adaptive cruise control had adjusted the vehicle speed or detected another vehicle ahead, most participants, regardless of whether they received the training, struggled to understand what was happening when the system didn’t detect a vehicle ahead because it was initially beyond the range of detection.

“Most of the people who didn’t receive training also struggled to identify when lane centering was inactive. In the training group, many people got that right. However, even in that group, participants often couldn’t explain why the system was temporarily inactive.”

This could mean more crashes if the driver doesn’t notice or understand what the indicators are showing.

“If your Level 2 system fails to detect a vehicle ahead because of a hill or curve, you need to be ready to brake. Likewise, when lane centering does not work because of a lack of lane lines, you need to steer,” IIHS President David Harkey said in a statement. “If people aren’t understanding when those lapses occur, manufacturers should find a better way of alerting them.”

ADAS and roads

Ironically, a third ADAS study announced Thursday found that “for the most part, drivers use the technology where it was intended,” according to the IIHS.

That research looked at a Range Rover Evoque with adaptive cruise control and a Volvo S90 with Pilot Assist, which combines adaptive cruise control and lane centering into a Level 2 capability. The S90’s lane centering was actually improved during the study thanks to a software upgrade, and the IIHS and MIT researchers accounted for this.

“In the study, 40 percent of interstate and other freeway miles of Evoque drivers were driven using adaptive cruise control,” the IIHS wrote Thursday. “Before the software update, 11 percent of interstate/freeway miles in the S90 were driven with adaptive cruise control alone and another 11 percent were driven with the Level 2 Pilot Assist system. After the update, those numbers were 8 percent and 20 percent, respectively.”
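To make the shift in the S90 figures easier to see, the short Python sketch below simply adds up the percentages quoted above. It assumes the two categories don't overlap, which the IIHS wording (“adaptive cruise control alone” and “another 11 percent”) suggests; the variable and function names are hypothetical.

```python
# Percent of S90 interstate/freeway miles, as quoted by the IIHS.
before_update = {"acc_only": 11, "pilot_assist": 11}
after_update = {"acc_only": 8, "pilot_assist": 20}


def total_assisted(shares: dict) -> int:
    """Percent of freeway miles driven with either system active."""
    return sum(shares.values())


def pilot_assist_fraction(shares: dict) -> float:
    """Fraction of the assisted miles that used the Level 2 Pilot Assist."""
    return shares["pilot_assist"] / total_assisted(shares)


for label, shares in (("before", before_update), ("after", after_update)):
    print(
        f"{label}: {total_assisted(shares)}% of freeway miles assisted, "
        f"{pilot_assist_fraction(shares):.0%} of those with Pilot Assist"
    )
```

On these numbers, assisted freeway driving in the S90 rose from 22 percent to 28 percent of miles after the update, and Pilot Assist's share of that assisted driving grew from half to roughly 71 percent.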

But the study also led to some more good news for shops. Some drivers used the technology where they probably shouldn’t.

The IIHS wrote that Evoque drivers used adaptive cruise control on 7 percent of “nonfreeway principal arterial miles” — which could mean intersections the ADAS couldn’t handle as well. Eleven percent of the S90’s nonfreeway miles occurred with the upgraded Pilot Assist active.

At least one driver didn’t use the Evoque’s adaptive cruise control or the S90’s Pilot Assist at all outside of the freeway, according to the IIHS and MIT. (At least one S90 driver didn’t even use the lower-level adaptive cruise control on either the freeways or arterials.)

However, some motorists were all about using the tech outside of the freeway: “One Evoque driver drove 41 percent of nonfreeway principal arterial miles with adaptive cruise control, and one post-update S90 driver used Pilot Assist for 30 percent,” the IIHS wrote.

“Driving automation could reduce crashes by eliminating some of the potential for human error,” IIHS senior research scientist Ian Reagan said in a statement. “But given the low use of the systems and the fact that most vehicles on the road today still don’t have these features, we don’t expect to see these crash reductions any time soon.”

Even if such incorrect behavior produces the same or more crashes on cars with the technology, it might still pose a problem for shops.

Autopilot lawsuit

The bad news for shops could come if confused, inattentive or negligent consumers pursue litigation against a former repairer of the vehicle following a crash with a Level 1 or Level 2 system engaged, with the driver’s attorney arguing that the shop’s work kept the system from avoiding the crash.

Whether such an allegation has any merit is irrelevant. The shop still has to expend time, energy and money dealing with the case. That’s not a prospect any business owner relishes. Complying with OEM procedures and proving you did so is probably the best way to be done with the matter as quickly as possible.

Such litigation has already arisen in Lommatzsch v. Tesla.

The South Jordan, Utah, Police Department cited plaintiff Heather Lommatzsch for failure to keep proper lookout after her 2016 Model S on Autopilot plowed into a truck at 60 mph in May 2018.

“About 1 minute and 22 seconds before the crash, she re-enabled Autosteer and Cruise Control, and then, within two seconds, took her hands off the steering wheel again,” the South Jordan Police Department wrote, citing Tesla’s review of vehicle data. “She did not touch the steering wheel for the next 80 seconds until the crash happened; this is consistent with her admission that she was looking at her phone at the time.”

Nevertheless, Lommatzsch sued Tesla, arguing its sales force told her the vehicle would stop on its own and that she only needed to touch the wheel occasionally while in Autopilot. She claimed Tesla should have communicated Autopilot’s limitations better.

But she also sued Service King, which “provided service to the Tesla Model S and had replaced a sensor on the Tesla Model S.”

Service King and Tesla have denied all of the allegations. Tesla said its potential defenses could include a “Plaintiff or some other third-party’s misuse, abuse, unauthorized alteration or modification of, or failure to maintain the subject vehicle” being responsible.

More information:

“New studies highlight driver confusion about automated systems”

Insurance Institute for Highway Safety, June 20, 2019

“Is automation used where it’s intended?”

IIHS, June 20, 2019

NASTF OEM repair procedures portal

SAE, Dec. 11, 2018

Images:

The Navigate on Autopilot user interface for a Tesla Model 3 is shown in a Nov. 1, 2018, handout photo. (Provided by Tesla)

New research by the Insurance Institute for Highway Safety found some drivers misunderstand the capabilities of the more advanced ADAS systems on the market, findings with possible ramifications for collision repairers. The biggest confusion seemed to surround the term “Autopilot.” (Provided by the Insurance Institute for Highway Safety)

Insurance Institute for Highway Safety Highway Loss Data Institute research has determined that numerous common advanced driver assistance systems are effective in reducing crashes. (Provided by Insurance Institute for Highway Safety)

New research by the Insurance Institute for Highway Safety found some drivers unclear about the dash indicators with the 2017 Mercedes E-Class’ Drive Pilot system. (Provided by the Insurance Institute for Highway Safety)

The South Jordan, Utah, Police Department cited plaintiff Heather Lommatzsch for failure to keep proper lookout after her 2016 Model S on Autopilot plowed into a truck at 60 mph in May 2018. (Provided by South Jordan Police Department)